Singular Value Decomposition

Singular Value Decomposition (SVD) is a factorization of a matrix into the product of three matrices: U, S, and the transpose of V. It can be written as:

$$A = U S V^T$$

where A is the original m × n matrix, U (m × m) and V (n × n) are orthogonal matrices, and S is an m × n rectangular diagonal matrix. The diagonal elements of S are called the singular values of the matrix A.

- U Matrix: The columns of the U matrix are the left singular vectors of the matrix A. These singular vectors are orthonormal, and those that correspond to non-zero singular values span the column space of A. In the full SVD, U is square, so its number of columns is equal to the number of rows in A.

- S Matrix: The S matrix is a diagonal matrix that contains the singular values of A in descending order. The singular values are non-negative real numbers, and each one measures how strongly A stretches vectors along the corresponding pair of singular vectors.

- V Matrix: The columns of the V matrix are the right singular vectors of A. These singular vectors are orthonormal, and those that correspond to non-zero singular values span the row space of A. In the full SVD, V is square, so its number of columns is equal to the number of columns in A.
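The shapes and orthogonality properties described above can be checked numerically. The following is a minimal sketch using NumPy; the 4 × 3 matrix is a hypothetical example chosen only for illustration, and the variable names are assumptions.

```python
import numpy as np

# Hypothetical 4x3 example matrix (values chosen only for illustration)
A = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, 3.0],
              [2.0, 0.0, 1.0],
              [1.0, 1.0, 1.0]])

# Full SVD: U is 4x4, s holds the 3 singular values, Vt is V transposed (3x3)
U, s, Vt = np.linalg.svd(A, full_matrices=True)

# Place the singular values on the diagonal of a 4x3 matrix S
S = np.zeros(A.shape)
S[:len(s), :len(s)] = np.diag(s)

# U and V are orthogonal: their transposes are their inverses
u_is_orthogonal = np.allclose(U.T @ U, np.eye(4))
v_is_orthogonal = np.allclose(Vt @ Vt.T, np.eye(3))

# Singular values are non-negative and sorted in descending order
is_sorted = bool(np.all(np.diff(s) <= 0))

# The product U S V^T recovers A
reconstructs = np.allclose(U @ S @ Vt, A)
```

Note that NumPy returns the singular values as a 1-D array and returns the transpose of V, which is why S has to be built explicitly here.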

Properties

- Rank: The rank of a matrix is equal to the number of non-zero singular values in the S matrix.

- Orthogonality: The matrices U and V are orthogonal, meaning that their transposes are also their inverses. The S matrix is diagonal, not orthogonal.

- Pseudoinverse: The SVD can be used to compute the pseudoinverse of a matrix, which is a generalization of the inverse matrix to non-square and singular matrices. The pseudoinverse gives the least-squares (minimum-norm) solution to linear systems that have no exact solution or no unique solution.

- Matrix Factorization: SVD can be used for matrix factorization, which is the process of representing a matrix as the product of two or more lower-dimensional matrices. This factorization can be useful for reducing the computational complexity of algorithms, improving the interpretability of the data, and discovering meaningful structure in the data.

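- Robustness: Standard algorithms for computing the SVD are numerically stable, and small perturbations of the matrix change its singular values only slightly. The large singular values tend to capture the dominant structure in the data, while the small ones often correspond to noise, which makes SVD useful in fields such as image processing and data analysis. Note, however, that SVD is a least-squares method, so a few large outliers can distort the leading singular vectors.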

- Low-Rank Approximations: SVD can be used to find low-rank approximations of matrices. By retaining only the top k singular values and the corresponding singular vectors, where k is much smaller than the dimensions of the original matrix, we obtain a rank-k approximation that captures most of the matrix's essential structure. By the Eckart-Young theorem, this is the best rank-k approximation in the Frobenius and spectral norms. The approximation reduces storage and the cost of later computations with the matrix.
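Two of the properties above, rank and the pseudoinverse, can be sketched directly from the singular values. The example below is only an illustration: the matrix is hypothetical, and the tolerance used to decide which singular values count as non-zero is an assumed (though common) choice.

```python
import numpy as np

# Hypothetical rank-deficient 3x3 matrix: the third row is the sum of the first two
A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0],
              [5.0, 7.0, 9.0]])

U, s, Vt = np.linalg.svd(A)

# In floating point, "non-zero" means "above a small tolerance" (assumed choice)
tol = s.max() * max(A.shape) * np.finfo(A.dtype).eps
rank = int(np.sum(s > tol))

# Pseudoinverse: invert only the singular values above the tolerance,
# then swap the roles of U and V
s_inv = np.zeros_like(s)
s_inv[s > tol] = 1.0 / s[s > tol]
A_pinv = Vt.T @ np.diag(s_inv) @ U.T

# Minimum-norm least-squares solution of A x = b
b = np.array([1.0, 2.0, 3.0])
x = A_pinv @ b
```

In practice, `np.linalg.matrix_rank` and `np.linalg.pinv` provide the same functionality with their own default tolerances.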

Implementation

import numpy as np

# define the matrix for decomposition

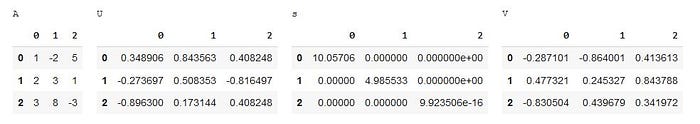

A = np.array([[1, -2, 5], [2, 3, 1], [3, 8, -3]])

# perform singular value decomposition

U, s, Vt = np.linalg.svd(A, full_matrices=False)

In this example, the matrix A is decomposed into U, s, and Vt using the numpy.linalg.svd function. Note that s is returned as a 1-D array of singular values rather than as a diagonal matrix, and that the third return value is the transpose of V, not V itself. The full_matrices argument is set to False to obtain a compact (economy-size) singular value decomposition.

The original matrix A can be reconstructed from U, s, and Vt using the following formula:

A_reconstructed = U @ np.diag(s) @ Vt

Types of SVD

There are a few variants of SVD which are important to know about:

Truncated SVD

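Truncated SVD is a variation of SVD that involves keeping only the top k singular values and corresponding singular vectors. This reduces storage and the cost of later computations while retaining the most important information of the original matrix. When only the top k components are computed in the first place, for example with an iterative solver, the cost of the decomposition itself is reduced as well.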
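As a rough illustration of truncation, the sketch below computes a full decomposition with NumPy and then keeps only the top k components. The random matrix and the choice k = 2 are assumptions made for the example. It also checks a known property of the truncated SVD: the Frobenius-norm error equals the square root of the sum of the squared discarded singular values.

```python
import numpy as np

# Hypothetical 6x5 random matrix, seeded for reproducibility
rng = np.random.default_rng(0)
A = rng.standard_normal((6, 5))

U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Keep only the top k singular values and vectors
k = 2
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# Approximation error, measured two ways
frob_error = np.linalg.norm(A - A_k, "fro")
expected_error = np.sqrt(np.sum(s[k:] ** 2))
```

Because this sketch computes the full SVD first, it saves storage but not computation. For large sparse matrices, a solver such as `scipy.sparse.linalg.svds` computes only the leading components.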

Economical SVD

The economical (also called thin or economy-size) SVD is a variation of SVD that computes only the first min(m, n) columns of U and V for an m × n matrix, dropping the singular vectors that are multiplied by the zero rows or columns of S. The product still reconstructs A exactly, but computation and memory are reduced substantially when the matrix is much taller than it is wide, or much wider than it is tall.
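In NumPy, the economy-size decomposition corresponds to `full_matrices=False`. The following sketch compares the shapes of the two forms on a hypothetical tall matrix; the dimensions are chosen only to make the difference visible.

```python
import numpy as np

# Hypothetical tall matrix: many rows, few columns
rng = np.random.default_rng(1)
A = rng.standard_normal((1000, 5))

# Full SVD: U is square (1000 x 1000)
U_full, s_full, Vt_full = np.linalg.svd(A, full_matrices=True)

# Economy SVD: U keeps only the first min(m, n) = 5 columns
U_econ, s_econ, Vt_econ = np.linalg.svd(A, full_matrices=False)

print(U_full.shape)  # (1000, 1000)
print(U_econ.shape)  # (1000, 5)

# The economy form still reconstructs A exactly (up to rounding)
reconstructs = np.allclose(U_econ @ np.diag(s_econ) @ Vt_econ, A)
```

The singular values and V are identical in both forms here; only the extra 995 columns of U are dropped.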

Randomized SVD

Randomized SVD is a variation of SVD that uses random projections to compute an approximate truncated SVD quickly. It is useful for large-scale matrices where computing the full SVD is too expensive.
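One common way to do this is a random range finder followed by a small exact SVD. The function below is an illustrative sketch of that idea, not a production implementation: the name `randomized_svd`, its parameters, and the default values for oversampling and power iterations are all assumptions made for this example.

```python
import numpy as np

def randomized_svd(A, k, n_oversamples=10, n_power_iter=2, seed=0):
    """Approximate the top-k SVD of A with a random range finder (sketch)."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    # Sample a few extra directions, but never more than the matrix allows
    sketch_size = min(k + n_oversamples, m, n)

    # Project A onto random directions to capture its dominant column space
    Omega = rng.standard_normal((n, sketch_size))
    Y = A @ Omega

    # Power iterations sharpen the subspace; QR keeps it numerically stable
    for _ in range(n_power_iter):
        Q, _ = np.linalg.qr(Y)
        Z, _ = np.linalg.qr(A.T @ Q)
        Y = A @ Z
    Q, _ = np.linalg.qr(Y)

    # Exact SVD of the much smaller projected matrix
    B = Q.T @ A
    U_small, s, Vt = np.linalg.svd(B, full_matrices=False)
    U = Q @ U_small
    return U[:, :k], s[:k], Vt[:k, :]

# Hypothetical test matrix with exact rank 5
rng = np.random.default_rng(42)
A = rng.standard_normal((200, 5)) @ rng.standard_normal((5, 100))

U, s, Vt = randomized_svd(A, k=5)
s_exact = np.linalg.svd(A, compute_uv=False)[:5]
```

On this exactly low-rank matrix the approximation matches the exact singular values closely; on real data the accuracy depends on how quickly the singular values decay. Libraries such as scikit-learn ship a tuned version of this idea (`sklearn.utils.extmath.randomized_svd`).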

Extensions of SVD

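SVD has been extended to more complex data structures, such as tensors and graphs. For tensors, generalizations such as the higher-order SVD play a similar role, and for graphs the decomposition is applied to matrices that describe the graph, such as the adjacency matrix. These extensions are useful for analyzing complex data structures in fields such as computer vision and network analysis.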

Applications

- Dimensionality Reduction: SVD is often used for dimensionality reduction by keeping only the k largest singular values and corresponding singular vectors. This reduces the size of the data while retaining most of its important information.

- Image Processing: SVD is used in image processing for tasks such as image compression, denoising, and image restoration. By discarding the components associated with the small singular values and keeping only the leading ones, we obtain a low-rank version of the image in which much of the noise has been removed.

- Recommender Systems: In recommender systems, SVD is used to factorize the user-item interaction matrix into two low-rank matrices, which can then be used to make recommendations to users.

- Natural Language Processing: SVD is used in natural language processing for tasks such as text classification, sentiment analysis, and topic modeling.

- Latent Semantic Analysis: Latent semantic analysis is a method for analyzing the relationships between a set of documents and the terms they contain. SVD is used to factorize the term-document matrix into two low-rank matrices, which can then be used to identify the latent topics in the documents.
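Several of the applications above rest on the same step: keep the leading singular components and discard the rest. The sketch below illustrates this for denoising, using synthetic data as a stand-in for an image. The low-rank "clean" matrix, the noise level, and the assumption that the true rank k is known are all hypothetical choices for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for an image: a rank-2 matrix plus small random noise
clean = rng.standard_normal((50, 2)) @ rng.standard_normal((2, 40))
noisy = clean + 0.1 * rng.standard_normal(clean.shape)

U, s, Vt = np.linalg.svd(noisy, full_matrices=False)

# Keep the k leading components (k is assumed known here;
# in practice it has to be chosen, e.g. by inspecting the singular values)
k = 2
denoised = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# Distance to the clean matrix before and after truncation
error_noisy = np.linalg.norm(noisy - clean)
error_denoised = np.linalg.norm(denoised - clean)

# Storage needed for the rank-k factors versus the full matrix
m, n = noisy.shape
stored_values_full = m * n
stored_values_rank_k = k * (m + n + 1)
```

The same truncation gives compression (only the rank-k factors need to be stored) and dimensionality reduction (each row is described by k coordinates instead of n).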

Other Similar Methods

Several other methods are closely related to Singular Value Decomposition (SVD), such as Principal Component Analysis (PCA), Eigenvalue Decomposition (EVD), and the Moore-Penrose pseudoinverse. They differ in their purpose and in the type of matrices they can handle:

- Singular Value Decomposition (SVD): SVD is a factorization of a matrix into three matrices, namely the left singular vectors, the singular values, and the right singular vectors. SVD is capable of handling any type of matrix, including rectangular matrices, and it is a useful tool for dimensionality reduction, image processing, and data analysis.

- Principal Component Analysis (PCA): PCA is a dimensionality reduction technique that finds the directions in the data that have the highest variance. These directions are the eigenvectors of the covariance matrix of the data, which are the same as the right singular vectors of the mean-centered data matrix, so PCA is usually computed with SVD. Retaining the top k directions reduces the number of features in a dataset while keeping the most important information.

- Eigenvalue Decomposition (EVD): EVD is a factorization of a square matrix into three matrices, namely the matrix of eigenvectors, the diagonal matrix of eigenvalues, and the inverse of the eigenvector matrix. EVD is only applicable to square matrices that are diagonalizable, and it is used to find the eigenvectors and eigenvalues of the matrix, which are useful for understanding its structure.

- Moore-Penrose Pseudoinverse: The Moore-Penrose pseudoinverse is a generalization of the inverse matrix that exists for any matrix, including rectangular and singular matrices. It is usually computed from the SVD by inverting the non-zero singular values. Applied to the right-hand side of a linear system, it gives the solution that minimizes the residual in the least-squares sense and, among all such solutions, the one with the smallest norm. This makes it useful for solving linear systems of equations when the matrix is not invertible.
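The relationship between PCA, EVD, and SVD described above can be checked numerically. The sketch below uses a hypothetical random dataset and compares the eigendecomposition of the covariance matrix with the SVD of the centered data; the variable names are assumptions for the example.

```python
import numpy as np

# Hypothetical dataset: 100 samples, 3 correlated features
rng = np.random.default_rng(0)
mixing = np.array([[2.0, 0.0, 0.0],
                   [0.5, 1.0, 0.0],
                   [0.0, 0.3, 0.2]])
X = rng.standard_normal((100, 3)) @ mixing

# PCA works on mean-centered data
X_centered = X - X.mean(axis=0)
n_samples = X.shape[0]

# Route 1: EVD of the covariance matrix (eigh returns ascending eigenvalues)
cov = X_centered.T @ X_centered / (n_samples - 1)
eigvals, eigvecs = np.linalg.eigh(cov)
eigvals = eigvals[::-1]
eigvecs = eigvecs[:, ::-1]

# Route 2: SVD of the centered data matrix
U, s, Vt = np.linalg.svd(X_centered, full_matrices=False)
variances_from_svd = s ** 2 / (n_samples - 1)

# Principal directions agree up to sign
same_directions = np.allclose(np.abs(Vt), np.abs(eigvecs.T))

# Project onto the top 2 principal components
X_reduced = X_centered @ Vt[:2].T
```

The eigenvalues of the covariance matrix equal the squared singular values divided by n - 1, and the eigenvectors match the rows of `Vt` up to sign. Working from the SVD avoids forming the covariance matrix explicitly.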

Conclusion

Thus, we saw that SVD is a powerful and widely used tool in linear algebra, with many applications in fields such as data analysis, machine learning, image processing, recommender systems, and natural language processing.
